In 1960, the Ford River Rouge plant in Dearborn, Mich., was a hive of automotive production. Men and women in dirty white jumpsuits buzzed around an assembly line. Welders’ faces, shielded by massive helmets like those of early dive suits, lit up with flashes as they attached the limbs of steel skeletons with welding guns. Some workers ground the rough joints into smooth silver patches while others installed doors, hoods and bumpers. Nearly every accessory, from headlights to windshield to seats, was put in place and perfected by the nearly 40,000 people who worked at the plant.

Fast forward to 2012 and the scene had changed. In the Dearborn plant and others across North America, humans had largely been replaced by robotic giants. In just a few decades, new technology in the form of automation transformed the manufacturing industry.

Today, a new kind of technology is knocking on our doors. And, depending on who’s doing the predicting, it could have an impact as large as the increased automation of the 1980s, ’90s and 2000s. It’s called generative artificial intelligence.

For most of us, the first inkling of generative AI came in November 2022, when the artificial intelligence company OpenAI released its chatbot, ChatGPT, to the public. Similar bots from Google, Microsoft and myriad other AI companies have followed, promising to help users summarize information, write text, conduct background research or generate computer code, often in a matter of seconds. Other generative models can create images and video almost on the spot. Unlike an artificial intelligence model trained to suggest a Netflix show you might like, a generative AI model produces human-like responses to users’ prompts. That means it can, theoretically, generate everything from a movie script to a will to a recipe for dinner.

Since the technology burst into public consciousness, questions have swirled about its potential impact on our jobs, our learning, our security, even our ability to tell what’s real. Many agree that generative AI can be a useful tool and create efficiencies in many areas of life. But as AI becomes more powerful and more deeply integrated into our daily lives and tasks, there’s also concern that it could go so far as to challenge aspects of our humanity.

In March 2023, more than 30,000 people, including SpaceX founder Elon Musk and Apple co-founder Steve Wozniak, signed an open letter calling on AI companies to pause development for six months on AI systems more powerful than the technology behind ChatGPT. They argued we need to learn how to better manage these technologies and to consider to what extent we want them in our lives. Even Sam Altman, CEO of OpenAI, the company that created ChatGPT, told the U.S. Senate judiciary committee that “regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models.”

So, what do we need to know about this potentially history-altering tool? What should we expect and what should we watch out for? Is generative AI really all it’s cracked up to be? As we stand at the precipice of what could prove to be a new era in artificial intelligence, experts at the U of A — ranked among the world’s best for the study of AI — are working to help us navigate this new AI technology and the ones to come.

Why all the excitement?

Generative AI models are getting a lot of attention right now, but they’ve actually been around for years. U of A computing scientist Alona Fyshe, ’05 BSc(Spec), ’07 MSc, first started studying these models a decade ago. At that time, she says, it was nearly impossible to get one to recognize that two words rhymed.

Now, researchers are using sophisticated generative AI to do everything from sorting through troves of data to diagnosing disease more swiftly to connecting people with mental illnesses to appropriate resources.

Part of the reason for all the excitement now is the pace at which these models are improving. But what makes generative AI really impressive, says Fyshe, is that it marks a shift in the kinds of tasks AI models can perform. Historically, an AI model was trained to complete a single task, such as choosing winning moves in chess; generative models can interact with users and produce text, code or images on a virtually unlimited range of topics. On top of that, the release of ChatGPT marked the first time a relatively sophisticated, easy-to-use (and free) generative AI model was placed in the hands of the public.

Also unprecedented is the speed with which everyday people have snatched up the technology. Twitter took two years to reach one million users. Instagram took 2½ months. ChatGPT took five days.

“A large company put up a very easy-to-access website,” says Fyshe. “I think that had a huge impact.”

Eleni Stroulia, computing scientist and vice-dean in the Faculty of Science, agrees that the sudden accessibility of generative AI models is an important advancement. For one thing, it demonstrates the power of data, which are key to many modern algorithms.

“But ChatGPT is a very small slice of what AI work is about,” Stroulia says. “It’s very important for people to not lose sight of all these opportunities and all this potential just because of this important advancement that is grabbing our attention today.”

To Richard Sutton, a world-renowned professor of computing science based at the U of A, generative AI is not really AI at all. True artificial intelligence uses computational abilities to achieve goals, he says. He gives the example of AlphaGo, developed by U of A grads David Silver, ’09 PhD, and Marc Lanctot, ’13 PhD, along with Aja Huang. In 2016, it became the first computer program to defeat a human world champion in the complex board game of Go, which has 10 to the power of 170 possible board configurations.

In Sutton’s view, models like AlphaGo meet the criterion for true AI; generative models do not. Generative AI models, he says, use computation to solve a hard problem, such as text summarization, language translation or protein folding. That’s not the same as being a goal-directed agent. Ultimately, he says, the difference is a question of degree. But it is also fundamental, because the limitations of large language models come directly out of the way they are created.

“Generative AI is basically mimicking humans,” he says. “Their appearance of being goal-directed is superficial and shallow.”

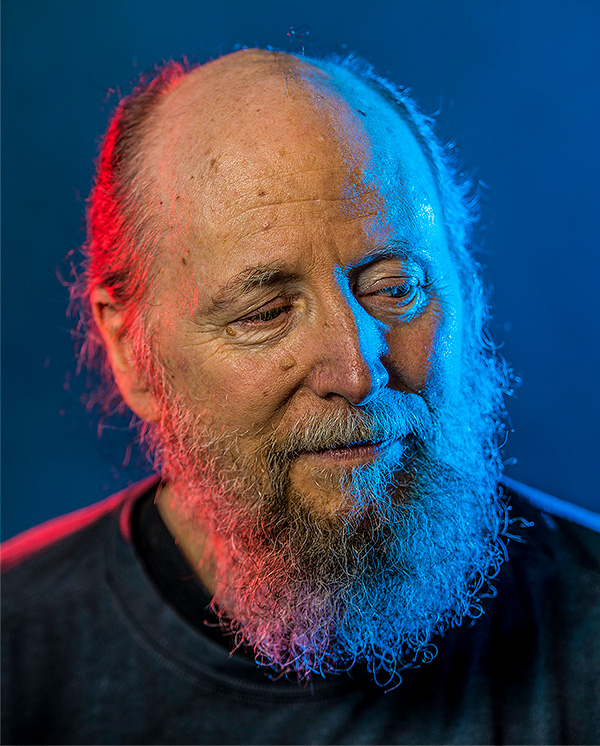

Sutton is chief scientific adviser at Amii, the Alberta Machine Intelligence Institute, in Edmonton, one of three national AI institutes in Canada. He has been working in the field of artificial intelligence since the 1980s and is one of the pioneers of reinforcement learning, a type of machine learning in which a model learns through trial and error, receiving rewards for good decisions and adjusting its behaviour to earn as much reward as possible over time.
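To make the idea of learning from reward a little more concrete, here is a minimal sketch of one of the simplest reinforcement-learning settings, often called a multi-armed bandit: an agent repeatedly picks one of several actions, each of which pays off with some hidden probability, and gradually learns which action earns the most. The payoff probabilities, the “epsilon-greedy” strategy and the function name `run_bandit` are illustrative assumptions for this article, not code from Sutton or Amii.

```python
import random

def run_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Hypothetical epsilon-greedy agent learning which action pays off most.

    true_probs: assumed chance that each action yields a reward of 1.
    Returns the agent's estimated value of each action and how often it tried each.
    """
    rng = random.Random(seed)      # seeded so the run is repeatable
    n = len(true_probs)
    estimates = [0.0] * n          # running average reward per action
    counts = [0] * n               # how many times each action was tried
    for _ in range(steps):
        # Explore: with probability epsilon, try a random action.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        # Exploit: otherwise pick the action with the best estimate so far.
        else:
            action = max(range(n), key=lambda a: estimates[a])
        # The environment hands back a reward of 1 or 0.
        reward = 1.0 if rng.random() < true_probs[action] else 0.0
        counts[action] += 1
        # Nudge the estimate toward the reward just received (incremental average).
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(3), key=lambda a: estimates[a])
print("best action:", best, "estimates:", [round(e, 2) for e in estimates])
```

With `epsilon=0.1`, the agent spends roughly one step in ten trying a random action and the rest repeating whichever action has paid best so far; over a few thousand steps it should settle on the third action, whose hidden payoff rate is highest. Balancing that exploration against exploitation is a central theme of reinforcement learning.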

While generative AI is the big topic of conversation right now, he says, “there’s so much more to artificial intelligence than this new generative AI stuff.”

Sutton and others at the forefront of the field are focused on developing artificial general intelligence, a type of AI that could theoretically accomplish any (and every) intelligent task a human could.

Generative AI models, while still a far cry from what experts might think of as truly intelligent machines, are a step in that direction.

“It used to be if we wanted to create a new model or do a new task, we had to train it from scratch,” says Fyshe. “When you could think about building a model through a text interface rather than having to train something special-purpose that could only do one thing, that was a new way of thinking.”

These new models stand to save immense amounts of time, money and other resources, she says, and allow AI development to progress faster than it would if researchers had to train a new model for every task.

“It’s just a fundamentally different way of solving AI problems,” says Fyshe.

What it can and can’t do

With generative AI making headlines around the world, and not always for its merits, some may be tempted to view the technology as a real-life HAL, the sentient AI system in 2001: A Space Odyssey that attacks the spacecraft’s crew members after they attempt to shut it down. But unlike 2001’s astronauts, experts at the U of A can tell us what’s actually going on behind the screen. And it’s not as scary — or even as smart — as it seems.

“Humans are famous for seeing intelligence where there is none,” Fyshe said in a 2023 TED Talk.

Generative AI models produce an output (at this point, mainly text, images or computer code) based on patterns learned from the data they were trained on. Large language models (also called generalist language models) such as ChatGPT, and image generators like Dall-E and Adobe’s Firefly, are considered generative AI.

To train a large language model, developers feed it enormous amounts of text from the internet. (GPT-3, a predecessor of the model behind ChatGPT, was trained on roughly 300 billion tokens, the words and word fragments these models process.) A model detects language patterns such as what types of words are used, which topics are connected and how often certain words occur together. When given a prompt, a model responds by predicting, one piece at a time, the words most likely to come next based on the data that were fed into it.
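The pattern-counting idea can be illustrated with a deliberately tiny model. The sketch below is a toy built on stated assumptions, not how ChatGPT works internally: it counts which word follows which in a short sample text (a so-called bigram model) and then predicts the most frequent next word, one word at a time. Real large language models use large neural networks over tokens, but they share the same basic goal of predicting a likely continuation. The sample sentence and the function names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each word follows each other word (a toy 'language model')."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, length=5):
    """Greedily append the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no pattern seen after this word, so the toy model stops
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram(corpus)
print(model["the"].most_common(2))  # "cat" follows "the" most often in this sample
print(generate(model, "the", 4))    # prints: the cat sat on the
```

Because the toy model always takes the single most frequent next word, it produces fluent-looking but repetitive text; production chatbots typically sample among likely continuations instead of always taking the top one.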

For example, while it might take a writer 30 minutes to generate an outline for a feature article, ChatGPT can come up with one in five seconds. You could even get it to write the story. Just enter the prompt into the chat box, “Write me 1,500 words on the role of railways in the First World War” and it will spit it out in no time (with an enviable lack of self-doubt).

But — and this is important — large language models don’t always produce the right answers.

As with autocomplete in an email or text message, generative AI is filling in the blanks. A model doesn’t actually “know” the answer you’re looking for. If the patterns in its training data don’t lead to a correct answer, it might just make something up, a flaw that has been dubbed “hallucination.”
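A toy example can show why filling in the blanks is not the same as knowing. The sketch below uses a tiny word-counting (bigram) model, an illustrative assumption far simpler than a real chatbot, with a hypothetical two-sentence training text. Asked to complete a sentence about a city it has never seen, it still produces a fluent, confident answer, because it only looks up which word usually comes next.

```python
from collections import Counter, defaultdict

def build_model(text):
    # Map each word to a tally of the words seen right after it.
    words = text.lower().split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table

def complete(table, prompt, extra_words=1):
    # Extend the prompt with the most frequent follower of its last word.
    # Nothing here checks facts; the model only looks up patterns.
    words = prompt.lower().split()
    for _ in range(extra_words):
        followers = table.get(words[-1])
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

corpus = "paris is the capital of france . rome is the capital of italy ."
table = build_model(corpus)
print(complete(table, "Berlin is the capital of"))  # prints: berlin is the capital of france
```

The training text never mentions Berlin, yet the toy model answers without hesitation. Because it looks only at the last word, it would also answer “france” for Rome, even though its own training text says otherwise.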

Fyshe is a CIFAR Artificial Intelligence chair — one of a number of leading researchers at the U of A and across the country funded to carry out fundamental and applied research and help train the next generation of leaders. She has been researching human brains and large language models to determine whether or not AI is really capable of understanding language the way we do.

In one study, Fyshe and her colleagues looked at electroencephalogram, or EEG, recordings of infants’ brain activity as the babies heard familiar words like “banana” and “spoon,” and compared them with the activity of a language model’s neural network (the equivalent of a computer brain) prompted with the same words. The researchers found that the way infant and computer “brains” processed language was more similar than different. But that doesn’t equate to understanding, says Fyshe.

In her TED Talk, Fyshe draws on the Chinese Room Argument formulated by American philosopher John Searle in 1980. She compares language models like ChatGPT to a man sitting in a room surrounded by thousands of books outlining the rules and patterns for speaking Chinese. Someone slides a piece of paper with Chinese writing on it under the door, and the man has to respond. He doesn’t know the language himself but, using the resources around him, he’s able to respond to the text and slip a coherent answer back under the door. To an outsider, it appears the man in the room knows Chinese — but we know differently. “Under the hood, these models are just following a set of instructions, albeit complex,” she says.

The baby who hears the word “banana” might associate it with snack time, sweetness, a parent feeding them. The AI, however, does not. It has never opened a door or seen a sunset or heard a baby cry, Fyshe explains in the talk.

“Can a neural network that doesn’t actually exist in the world, hasn’t really experienced the world, really understand language about the world? Many people would say no.”

The implications for learning

Whether or not generative AI can boast of intelligence (and it probably could boast, if you asked it to), these models are already transforming the way we learn and interact with information. That’s raising all kinds of ethical and practical questions, especially in education. Is it cheating or is it a tool? Should we embrace it or blackball it? When evidence of students using generative AI began turning up in homework assignments without warning after November 2022, some North American universities and high schools, including the entire New York City public school system, responded by banning the technology completely (though the New York district later rescinded its ban).

Other institutions, including the U of A, are addressing the arrival of the new technology differently.

“The U of A’s approach has been largely: How do we support our students to use AI as a tool?” says Karsten Mundel, ’95 BA, vice-provost of learning initiatives. He chairs the Provost’s Taskforce on Artificial Intelligence and the Learning Environment, made up of professors and educational experts from a broad range of disciplines. The group was convened early in 2023 to help instructors and students navigate the use of generative AI responsibly.

The task force came up with a number of recommendations. Among them are prioritizing learning opportunities that improve AI literacy among the U of A community and, particularly for graduate students, creating opportunities to explore the intersections of AI with their field of study. The recommendations also encourage professors to include “purposeful statements about AI” in course syllabuses so that students are clear on how they are and aren’t allowed to use it.

“Students don’t want to cheat. That’s not the goal,” says Mundel, but if their classmates are using generative AI to write their essay outline — or even entire papers — students may feel pressure to do the same.

“It is a challenge but also an opportunity to reimagine the kinds of assessments that we’re doing and the ways in which we’re asking students to demonstrate achievement,” he says.

Some educators are embracing the technology as a learning tool. Geoffrey Rockwell, a professor of philosophy and digital humanities in the Faculty of Arts, sees the potential for large language models to help students process their ideas.

“There is a long tradition in philosophy of thinking through difficult topics with dialogue,” Rockwell wrote in an article for The Conversation. Unlike speeches or written essays, which can’t adapt to a listener or reader, a dialogue engages both parties and enables a two-way flow of ideas. Using a character generator like Character.AI, he says, students can engage in critical conversation with fictional characters and even question their ideas and philosophies.

Many universities, including the U of A, have given professors the power to decide if and how students use the technology in their classes. This has the potential benefit of familiarizing students with generative AI, including its risks and potential uses beyond generating a poorly written essay. When students learn how to use the technology responsibly, they can transfer those skills to the workplace.

That’s the idea behind the U of A’s new Artificial Intelligence Everywhere course, which is open to undergraduate students in any faculty. Fyshe and computing science professor Adam White, ’06 MSc, ’15 PhD, developed the course as part of a collaboration between the university and Amii. The goal is to demystify AI and give students the opportunity to consider how they might encounter and harness artificial intelligence in their fields of study. For example, says White, a chemistry student might start thinking about how a machine learning algorithm could assist them in future experiments, or a business student could consider how generative AI might benefit their startup.

“AI will continue to touch more and more aspects of people’s daily lives but also their careers,” says White. “It’s really important that students coming out of the U of A have an appreciation for those nuances.”

The course will soon be rolled out to other post-secondaries in Alberta, and the hope is to one day develop a massive open online course, or MOOC, so that anyone — not just university students — can better understand AI technology and its uses.

“AI literacy is critical, because we want people not to be afraid of technological advances,” says White. “The best way to do that is to understand them in some reasonable way.”

What about our jobs?

Technology has been transforming the way we work since the advent of the wheel. From the agricultural revolution to the humble washer and dryer, humans have sought out ways to ease the burden of labour.

Advances in technology can be beneficial in the long term to individuals and society — safer jobs, more interesting work, better health, more productive economies, a higher standard of living — but new technology can also create painful dislocation and disruption, not to mention the loss of jobs in the short term. With a year of generative AI under our belts, it’s a bit clearer what the technology is and isn’t very good at in the workplace.

“People are using it for the boring stuff, or to get started on something more interesting,” says Fyshe. “It’s not writing A+ essays or novels.” But, she notes, it’s pretty good at formulaic writing tasks. “It has changed the way a lot of people do their jobs,” she adds. And some companies are making the most of it.

Indian tech startup Dukaan made headlines in July for replacing 90 per cent of its support staff with an AI chatbot. German publisher Axel Springer announced it would cut hundreds of jobs, some of which would be replaced by artificial intelligence. Dropbox shared that it would reduce its workforce by 16 per cent, stating “the AI-era of computing has finally arrived” and the company needs a “different mix of skill sets” to continue growing.

Some employees have been fighting to prevent job disruption before it happens. TV and movie writers in Hollywood went on strike last year, in part after failing to come to an agreement with studios about the use of generative AI in scripts.

“Any time a technology comes into place that wipes out certain forms of work or even changes the division of labour, it’s going to challenge people’s identities and sense of self,” says Nicole Denier, an assistant professor of sociology who specializes in work, economy and society.

She points to a 2020 study out of the University of California that linked the opioid crisis in the U.S. to the increased automation of labour and the move to offshore manufacturing in the 1990s, which put many employees out of work. Using records of 700,000 drug deaths between 1911 and 2017, the author found “strong evidence” that the decline of state-level manufacturing in the labour market predicted as many as 92,000 overdose deaths for men and 44,000 overdose deaths for women.

“Certain segments of the working population lost their position in the social and economic hierarchy,” says Denier.

“Work is really foundational to many of our identities,” she says. Most adults spend around half their waking hours working. Our jobs are one of the first things we tell people about ourselves. Work has historically been so tied up in humans’ identities that many people adopted their trades as their family names (think of Miller or Smith). Denier’s own family speculates its surname may refer to the small gold coins minted by her French ancestors.

“I think what’s new about large language models is that they will largely touch jobs that require more formal education — so lawyers, professors, engineers. Occupations that have traditionally been protected from some of the previous waves of AI might finally see this impacting their occupations,” she says.

Denier encourages employees and employers to talk about whether and how generative AI tools should be used in the workplace. She adds it’s also important that academics and researchers from diverse fields, including history, languages and fine arts, weigh in on the matter.

“We need lots of conversations from lots of different people,” she says. “Not just tech companies, not just computer scientists, but the people who will be affected.”

Humans create tools all the time, she adds. It’s up to people to decide how they are used.

“How will it be implemented in workplaces? That’s still an open question.”

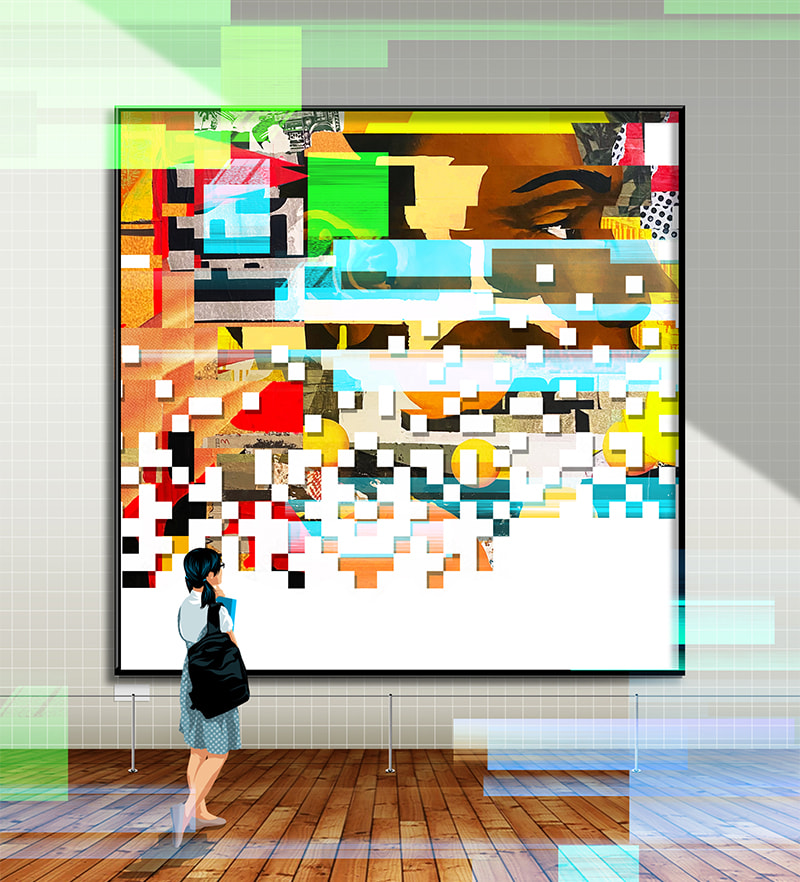

The creative quandary

Generative AI is cropping up in areas that are even more difficult to navigate. When it is used to create visual art, poetry, stories — forms that connect us to one another, teach us about ourselves and unite us in our humanity — we get into murky territory. For some, it’s a welcome tool that offers ideas, saves time and helps them work in tandem with technology. For others, it’s a cheap trick, further alienating humans from the art they create.

With AI-generated art entering the mainstream (Adobe’s newest version of Photoshop now includes generative technology), questions are cropping up about what makes something art, and who makes an artist. Last August, a Washington, D.C., court ruled against a computer scientist seeking copyright for a piece of visual art created by an AI system of his own making. The judge deemed that, based on centuries of understanding, human creation is a “bedrock requirement of copyright.” The scientist’s AI system did not pass the CAPTCHA.

“Art has a deep meaning for us,” says Rockwell. “We have a different relationship to art than we do to utilitarian objects. I need a stove to cook dinner. The paintings on my wall, I don’t need them to do something. They’re not tools to an end. They are the ends themselves.”

The adoption of tools in visual art is not new. From watercolour markers to drawing software, artists have made use of advancing technology as much as anyone else. But new technology can come with tradeoffs. Until the end of the 19th century, drawing was a form of basic literacy among educated people, says Rockwell. Soldiers needed to be able to map out routes and battle plans; archeologists had to sketch their discoveries. With the advent of photography, that need was largely eliminated. People still draw, but as a specialty, not a basic skill.

Art is one of those topics people can never quite agree on. Is a splotch of red paint on a page or a rotting banana in a gallery “art”? Adding generative tools to the mix complicates the conversation even further. How much can a tool do before it’s the tool’s piece of art and not the artist’s? What’s more, when AI models are generating art based on pieces created by other artists — many of whom may not have given permission — it creates a whole other kind of ethical conundrum.

While it’s becoming more common to see AI-generated images in magazines, on book covers and in advertisements, Rockwell thinks the novelty will eventually wear off. He suspects people will begin to seek out art forms that can’t be produced by AI, such as ceramics or theatre.

“Even if computers get to be extraordinarily effective at imitating the arts that we find entertaining, I think we will continue to reflect on works of art that we know were made by humans,” he says. “Because we see them as a way of learning about ourselves.”

How will we know what’s true?

More than a year after generative AI was first thrown open to the public, people have begun to realize it is not the workhorse they first thought it was. Enough stories have come out about its flaws (errors, hallucinations, downright silly answers) for users to recognize its limits.

In June, a lawyer faced a disciplinary hearing after using the technology to generate a court brief that, unbeknownst to him, cited phoney cases. “I did not comprehend that ChatGPT could fabricate cases,” he told the judge. The mishap served as a warning for anyone using the tool without first checking the facts.

In November, Vectara, a company founded by former Google employees, set out to test the accuracy of different chatbots by giving them 10 to 20 facts and asking them to summarize. The results? Depending on the chatbot, Vectara says, the bots invented information anywhere from three to 27 per cent of the time.

And it’s not just the lack of accuracy that’s of concern. It’s the possibility that the tool might be exploited by bad actors.

“I worry about its ability to manipulate,” says Fyshe. “That can happen with no ill intent, and that can happen with ill intent.”

Governments are starting to react to the unsavoury possibilities of generative AI. Canada’s proposed Artificial Intelligence and Data Act is intended to “set the foundation for the responsible design, development and deployment of AI systems that affect the lives of Canadians.” But the country currently has no regulatory frameworks specific to AI. That means a lot of responsibility falls on the public to educate themselves about the technology.

White and Fyshe’s class is a step forward in creating a more AI-literate generation. The Provost’s Taskforce on Artificial Intelligence and the Learning Environment has plans to expand its guidelines for high school teachers to help better prepare students to deal with generative AI as they enter university.

“AI is touching, and will continue to touch, more and more aspects of people’s daily lives,” says White. Learning more about the tool and its capabilities will help alleviate unnecessary fear while encouraging caution where it’s justified.

“I encourage people to play around with it,” says Fyshe. “Just see what it can do.”

Growing pains

ChatGPT is not capable of a Matrix-style takeover, at least not in its current form. But it, and models like it, are already changing aspects of our lives. Some of these changes are evident, such as the need for new approaches to teaching and learning or the ways workplaces are embracing the technology as a tool for efficiency. Other repercussions, for our sense of truth, our identities and the relationship between creativity and humanity, are much less quantifiable.

Generative AI as a branch of artificial intelligence has come a long way. It marks a shift in the way AI models are trained and the types of tasks a single model can undertake. While these tools have clear limitations, they can be incredibly effective if used within those limitations.

Experts still disagree on the potential effects of the technology and how cautious we should be in incorporating it into our lives. Sutton, for one, wants people to reconsider their fears around artificial intelligence in general, especially as models improve.

“It’s not appropriate to be afraid of intelligence,” he says. “We believe education is good. And all the efforts we put in to try to make us smarter people have brought about our civilization. More intelligence in the world would be a good thing.”

Others are more circumspect about letting the wheels of progress push us along too hastily.

“We need to challenge the rhetoric of inevitability,” says Rockwell, noting his concern that models like generative AI could be harnessed by people with hostile intent. “We need to, in some sense, empower our governments to regulate.”

Stroulia feels great optimism about the opportunities and benefits that AI advancements will bring, particularly in the U of A stronghold of reinforcement learning. But she emphasizes the need for people to become informed about AI and how it might affect our careers and lives.

“As citizens, we should be asking for legal frameworks to be placed around AI systems and, more generally, software systems that are embedded in government decision-making. Commercial software takes our data and uses it for multiple purposes. We should all be making sure that these activities are both allowed and amplified but also contained into proper laws that take care of people.”

The more we can educate ourselves about generative AI — and AI in general — the more we can understand, manage and benefit from its tremendous potential and steer clear of possible pitfalls.

Perhaps the furor over generative AI is a good thing. It has made many of us sit up and take notice. We are discovering its limitations and its potential. We are asking questions, learning and discussing. Perhaps many voices are just what society needs as we navigate the use of this new tool, and the AI advances to come, for the benefit of us all.

If the tools are in the hands of the people, as many believe they should be, then it’s possible we all have more power than we think in shaping the role of AI in society.

We at New Trail welcome your comments. Robust debate and criticism are encouraged, provided it is respectful. We reserve the right to reject comments, images or links that attack ethnicity, nationality, religion, gender or sexual orientation; that include offensive language, threats, spam; are fraudulent or defamatory; infringe on copyright or trademarks; and that just generally aren’t very nice. Discussion is monitored and violation of these guidelines will result in comments being disabled.